Squiggle AI: An Early Project in Automated Modeling

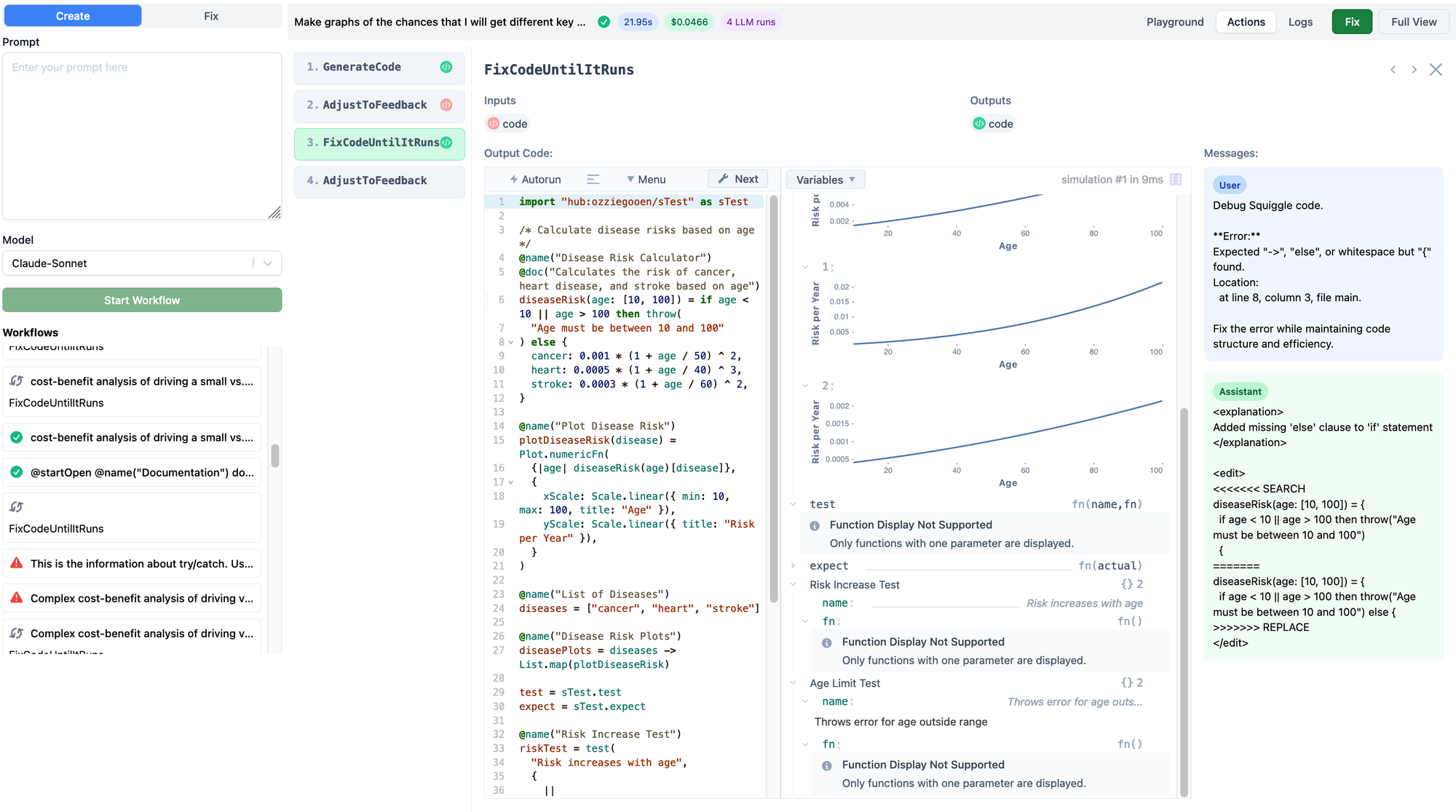

We're releasing Squiggle AI on SquiggleHub. Squiggle AI runs long sequences of LLM calls in order to write, fix, and improve Squiggle code.

Squiggle AI is a significant improvement over our previous experiments with Squiggle GPT and simple Claude prompts, offering more reliability and customization options.

It's free on SquiggleHub, but you're also welcome to download it, customize it, and run it locally with your own Anthropic or OpenAI tokens.

We encourage you to try it out, especially for estimates you've been postponing or are curious about. Be creative! Any feedback is highly appreciated.

Some Potential Uses

- Cost-benefit analyses. Cost-benefit calculations can be fairly generic, making them amenable to automation. Try asking for estimates of things in your life, organizations, or large-scale interventions.

- Forecasting Assistance. Some forecasting questions are particularly amenable to Fermi estimates. This whole process can be automated (you can use Squiggle AI as part of an existing AI forecasting workflow), or Squiggle AI can simply be used for assistance.

- Scorable functions. These can be intimidating at first, but AI can do much of the code-writing. It would be neat to see experimenters run scorable function tournaments - and in these cases, AI could be used to help forecasters actually write well-typed functions.
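To make the first two uses concrete, here is a minimal sketch of the kind of Fermi-style cost-benefit model involved, written in plain Python rather than Squiggle. Everything in it is an illustrative assumption: the intervention, the input ranges, and the choice to treat each range as the 90% interval of a lognormal distribution. It is not output from Squiggle AI.

```python
import math
import random
import statistics

Z_90 = 1.6448536269514722  # z-score for the 95th percentile of a standard normal


def lognormal_from_90ci(low, high, rng):
    """Sample a lognormal whose 5th/95th percentiles are `low` and `high`."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z_90)
    return rng.lognormvariate(mu, sigma)


def simulate_net_benefit(n_samples=10_000, seed=0):
    """Monte Carlo estimate of net benefit for a hypothetical intervention."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_samples):
        # All three ranges below are made-up inputs for illustration.
        people_reached = lognormal_from_90ci(1_000, 5_000, rng)
        benefit_per_person = lognormal_from_90ci(2, 20, rng)  # dollars
        total_cost = lognormal_from_90ci(5_000, 15_000, rng)  # dollars
        results.append(people_reached * benefit_per_person - total_cost)
    return results


samples = simulate_net_benefit()
quantiles = statistics.quantiles(samples, n=20)  # cut points at 5%, 10%, ..., 95%
summary = {
    "p5": quantiles[0],
    "median": statistics.median(samples),
    "p95": quantiles[-1],
    "prob_positive": sum(s > 0 for s in samples) / len(samples),
}
print(summary)
```

The point of the sketch is the shape of the model: a few uncertain inputs, simple arithmetic, and a distribution as the result. That structure is generic enough that much of it can plausibly be automated.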

Strengths & Weaknesses

Squiggle AI is aimed primarily at debugging and fixing syntax issues in Squiggle code by running sequences of efficient LLM calls. While it ensures code is syntactically correct, it currently places less emphasis on semantics, visualizations, or enhancing readability. As a result, the code it generates often works, but it is likely to include unconventional assumptions or be challenging to interpret.

We chose to focus on syntax correction initially because getting the syntax right is essential for models to load and run properly. While other tools are better at logical reasoning and structuring, Squiggle AI uniquely offers a decent success rate in producing valid Squiggle code.

For tasks like retrieving information from the web or refining the broader structure of your code, we recommend using complementary tools such as Perplexity, ChatGPT o1, and Metaforecast. You can paste web news results into Squiggle AI, or you can take Squiggle AI outputs, ask other models to improve them in different ways, then bring the results back to Squiggle AI for syntactic fixes.

Current Limitations

- 3-minute runtime limit

- $0.30 cost limit

- Single file operations only

- Soft limit of ~70 lines of code for new generation

- Occasional failures requiring multiple runs or human intervention

- Limited access to SquiggleHub libraries

Technical Insights

Under the hood, Squiggle AI relies on several techniques to achieve its reliability. In brief, these are:

- Prompt caching with Claude for cost-effective queries

- Iterative error correction

- Custom checks for common Squiggle mistakes

- Real-time code execution and result feedback

- A built-in testing library

- Code diff approach for modifications

- Markdown format for run logs

These techniques allow us to overcome challenges like LLMs' unfamiliarity with Squiggle and the need for functional programming approaches.
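As a rough illustration of how iterative error correction and a diff-based approach to modifications can fit together, here is a minimal Python sketch. It is not the Squiggle AI implementation. The checker and the "LLM" are hypothetical stubs (`check_code`, `propose_fix`), the edit format is an assumed search/replace pair, and the code being fixed is a toy language rather than Squiggle.

```python
from dataclasses import dataclass


@dataclass
class Edit:
    """A search/replace edit, standing in for a code diff returned by an LLM."""
    search: str
    replace: str


def apply_edit(code, edit):
    """Apply one edit; fail loudly if the search text is missing or ambiguous."""
    if code.count(edit.search) != 1:
        raise ValueError(f"search text must match exactly once: {edit.search!r}")
    return code.replace(edit.search, edit.replace)


def check_code(code):
    """Toy checker (hypothetical): returns a list of error messages.

    A real pipeline would run the code and collect interpreter errors instead.
    """
    errors = []
    if code.count("(") != code.count(")"):
        errors.append("unbalanced parentheses")
    if "retrun" in code:
        errors.append("unknown keyword 'retrun'")
    return errors


def propose_fix(code, error):
    """Stub for an LLM call (hypothetical): maps a known error to an edit."""
    if error == "unknown keyword 'retrun'":
        return Edit("retrun", "return")
    if error == "unbalanced parentheses":
        for line in code.splitlines():
            if line.count("(") > line.count(")"):
                return Edit(line, line + ")")
    return None


def fix_until_valid(code, max_steps=5):
    """Loop: check, request a fix for the first error, apply it, repeat."""
    log = []
    for step in range(max_steps):
        errors = check_code(code)
        if not errors:
            break
        edit = propose_fix(code, errors[0])
        if edit is None:
            break  # no fix available; leave it to a human
        code = apply_edit(code, edit)
        log.append(f"- step {step + 1}: fixed {errors[0]}")
    return code, log, not check_code(code)


broken = "total = sum(costs\nretrun total"
fixed, log, ok = fix_until_valid(broken)
print(fixed)
print("\n".join(log))
```

Small edits keep each step cheap compared with regenerating the whole file, and capping the number of steps is one simple way to stay within runtime and cost limits. Requiring the search text to match exactly once is a design choice in this sketch so that a bad edit fails visibly instead of silently changing the wrong line.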

Going Forward

It's taken us a few months to create Squiggle AI as it is so far. We've learned a lot from this, and have a bunch of ideas about where to take things next. Stay tuned for further writing and functionality.

Enjoy!