Quantified Uncertainty Research Institute

QURI is a nonprofit research organization that studies forecasting and epistemics to improve the long-term future of humanity.

Opinion Fuzzing: A Proposal for Reducing & Exploring Variance in LLM Judgments Via Sampling

Summary: LLM outputs vary substantially across models, prompts, and simulated perspectives. I propose "opinion fuzzing" for systematically sampling across these dimensions to quantify and understand this variance. The concept is simple, but making it practically usable will require thoughtful tooling. In this piece I discuss what opinion fuzzing

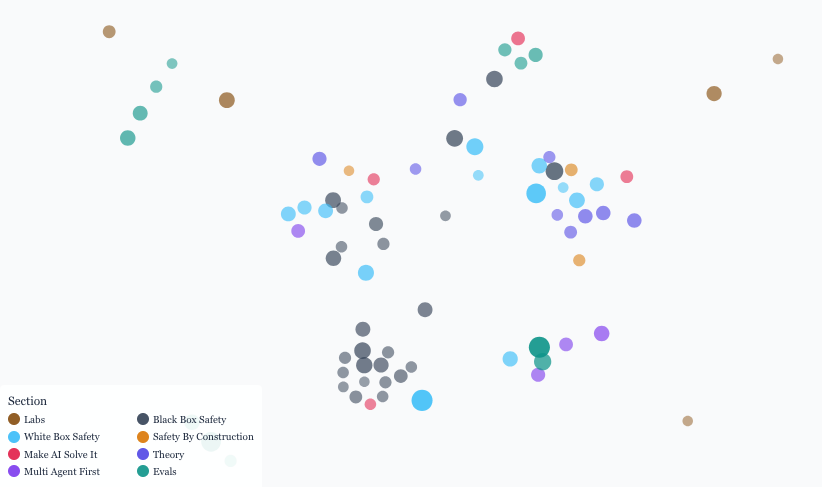

New Collaboration: Shallow Review of Technical AI Safety, 2025

We recently collaborated with the Arb Research team on their latest technical AI safety review. This document provides a strong overview of the space, and we built a website to make it significantly more manageable. The interactive website: shallowreview.ai. The review examines major research directions in technical AI safety

Announcing RoastMyPost

Today we're releasing RoastMyPost, a new application for blog post evaluation using LLMs. Try it Here

TLDR

* RoastMyPost is a new QURI application that uses LLMs and code to evaluate blog posts and research documents.
* It uses a variety of LLM evaluators. Most are narrow checks: Fact Check,

Beyond Spell Check: 15 Automatable Writing Quality Checks

I've been developing RoastMyPost (currently in beta) and wrestling with how to systematically analyze documents. The space of possible document checks is vast, easily thousands of potential analyses. Building on familiar concepts like "spell check" and "fact check," I've made a taxonomy

Updated LLM Models for SquiggleAI

We've upgraded SquiggleAI to use Claude Sonnet 4.5, Claude Haiku 4.5, and Grok Code Fast 1. This is a significant upgrade over the previous Claude Sonnet 3.7 and Claude Haiku 3.5. All three are available now. Initial testing shows meaningful improvements in code generation

Shape Squiggle's Future: Take our Squiggle Survey

Dear Squiggle Community, At QURI, we're focused on tools that advance forecasting and epistemics to improve decision-making. As you know, we care deeply about evaluation, and we're holding a survey on Squiggle to better understand how and why people use our work. Honestly, developing this tooling

A Sketch of AI-Driven Epistemic Lock-In

Epistemic status: speculative fiction. It's difficult to imagine how human epistemics and AI will play out. On one hand, AI could provide much better information and general intellect. On the other hand, AI could help people with incorrect beliefs preserve those false beliefs indefinitely. Will advanced AIs attempting

Evaluation Consent Policies

Epistemic Status: Early idea. A common challenge in nonprofit/project evaluation is the tension between social norms and honest assessment. We've seen reluctance among effective altruists to publicly rate certain projects for fear of upsetting someone. One potential tool to use could be something like an

Recent Updates

Squiggle AI & Sonnet 3.7: We've updated Squiggle AI to use the new Anthropic Sonnet 3.7 model. In our limited experimentation with it so far, it seems like this model is capable of making significantly longer Squiggle models (from roughly 200 lines to roughly 500 lines), but that

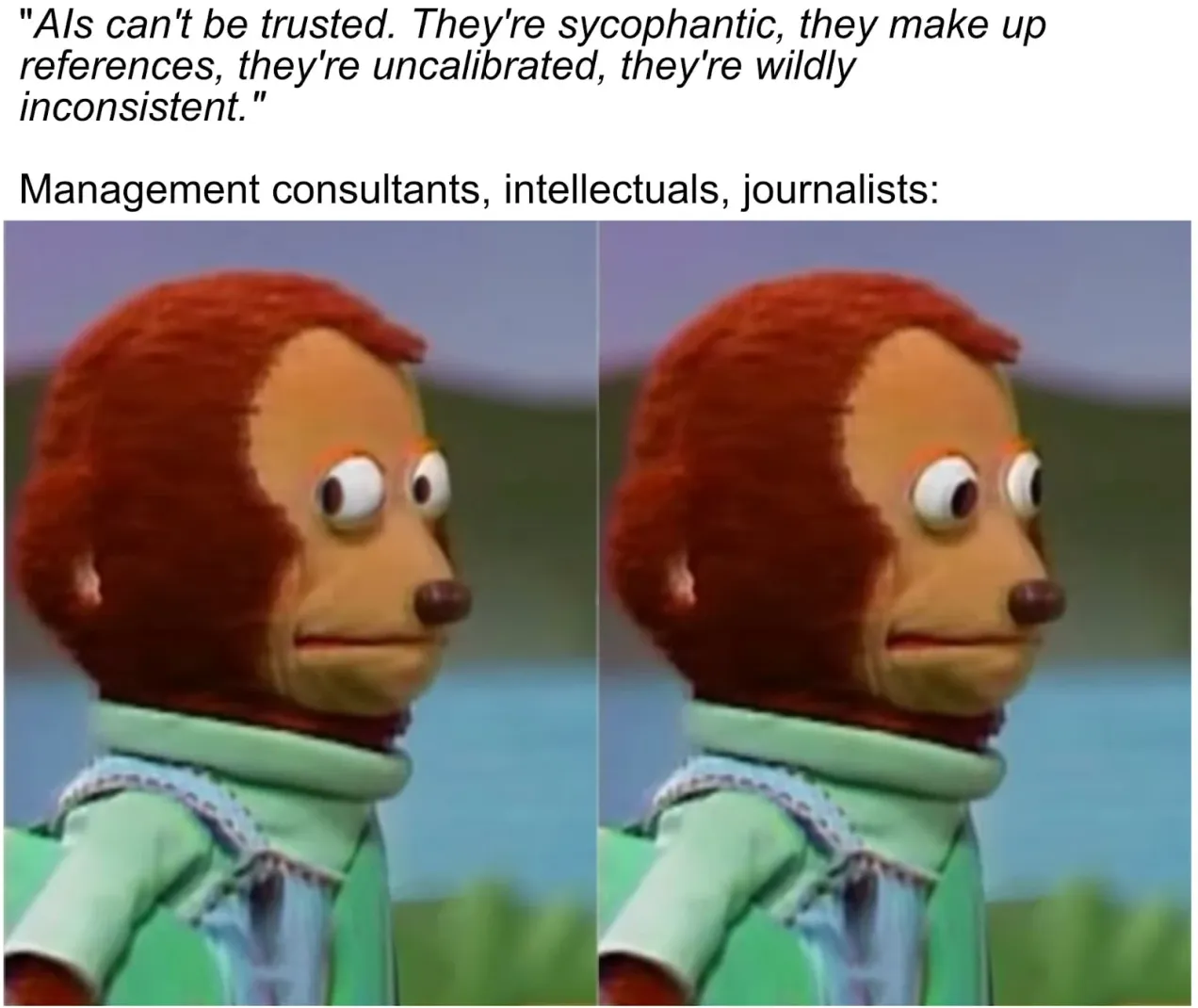

6 (Potential) Misconceptions about AI Intellectuals

Update: I recently posted this to the EA Forum, LessWrong, and my Facebook page, each of which has some comments. Epistemic Status: A collection of thoughts I've had over the last few years, lightly edited using Claude. I think we're at the point in this discussion

$300 Fermi Model Competition

We're launching a short competition to make Fermi models, in order to encourage more experimentation with AI and Fermi modeling workflows. Squiggle AI is a recommended option, but is not at all required. The ideal submission might be as simple as a particularly clever prompt paired with the

Squiggle 0.10.0

After a six-month development period, we’ve released Squiggle 0.10.0. This version introduces important architectural improvements like more robust support for multi-model projects and two new kinds of compile-time type checks. These improvements will be particularly beneficial in laying a foundation for future updates. This release also includes