Quantified Uncertainty Research Institute

QURI is a nonprofit research organization studying forecasting and epistemics to improve the long-term future of humanity.

QURI-related Questions, on Manifold Markets

Recently, I’ve experimented with writing questions on Manifold. I find it the most accessible forecasting platform for asking questions quickly. Many of these questions could be asked and resolved better, but I think doing miniature versions is often better than waiting for polished versions. Questions can always be improved

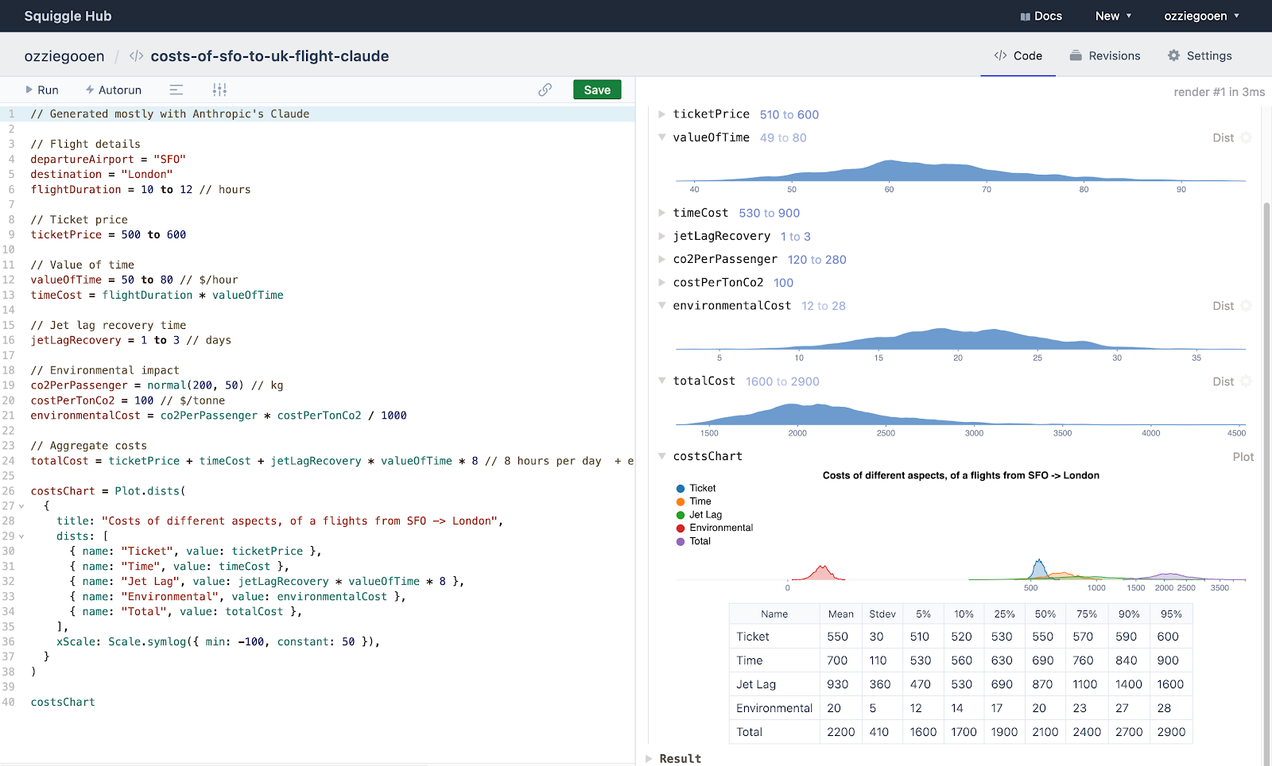

Open Technical Challenges around Probabilistic Programs and JavaScript

While working on Squiggle, we’ve encountered many technical challenges in writing probabilistic functionality in JavaScript. Some of these challenges have been solved in Python and need to be ported over, and some apply to all languages. We think the following tasks could be good fits for others to tackle. These are

Using Points to Rate Different Kinds of Evidence

There’s a lot of discussion on the EA Forum and LessWrong about epistemics, evidence, and updating. I don’t know of many attempts at formalizing our thinking here into concrete tables or equations. Here is one (very rough and simplistic) attempt. I’d be excited to see much better

Squiggle 0.8.4

Private Models and UI improvements

Announcing Squiggle Hub

A new, free platform for writing and sharing Squiggle code

Squiggle 0.8

A much better editor and viewer, function annotations, and lots more

Squiggle: Technical Overview (2020)

This post was originally published in November 2020 on LessWrong. We’re moving this document here to centralize our writing in one place. This piece is meant to be read after Squiggle: An Overview. It includes technical information I thought best separated out for readers familiar with coding. As such,

Squiggle Overview (2020)

This post was originally published in November 2020 on LessWrong. We’re moving this document here to centralize our writing in one place. I’ve spent a fair bit of time over the last several years iterating on a text-based probability distribution editor (the 5 to 10 input editor in

The Squiggly language (Short Presentation, 2020)

A short presentation from 2020 about a very early version of Squiggle

Downsides of Small Organizations in EA

Recently I participated in the EA Strategy Fortnight with this post on the EA Forum. There are some good comment threads there that I suggest checking out. Note: Our main work recently has been on making a “Squiggle Hub” that we intend to announce in the next few weeks, so there’

Relative values for animal suffering and ACE Top Charities

tl;dr: I present relative estimates for animal suffering and 2022 top Animal Charity Evaluators (ACE) charities. I am doing this to showcase a new tool from the Quantified Uncertainty Research Institute (QURI) and to present an alternative to ACE’s current rubric-based approach.

Introduction and goals

At QURI, we’

Ozzie, on the Mutual Understanding Podcast

Hear me ramble on coordination, government, and estimation systems