Relative values for animal suffering and ACE Top Charities

tl;dr: I present relative estimates for animal suffering and 2022 top Animal Charity Evaluators (ACE) charities. I am doing this to showcase a new tool from the Quantified Uncertainty Research Institute (QURI) and to present an alternative to ACE’s current rubric-based approach. Introduction and goals At QURI, we’

Some estimation work in the horizon

Too much work for any one group

Estimation for sanity checks

I feel very warmly about using relatively quick estimates to carry out sanity checks, i.e., to quickly check whether something is clearly off, whether some decision is clearly overdetermined, or whether someone is just bullshitting. This is in contrast to Fermi estimates, which aim to arrive at an estimate

Winners of the Squiggle Experimentation and 80,000 Hours Quantification Challenges

In the second half of 2022, we announced the Squiggle Experimentation Challenge and a $5k challenge to quantify the impact of 80,000 Hours' top career paths. For the first contest, we got three long entries. For the second, we got five, but most were fairly short. This post

Use of “I’d bet” on the EA Forum is mostly metaphorical

Epistemic status: much ado about nothing.

Straightforwardly eliciting probabilities from GPT-3

I explain two straightforward strategies for eliciting probabilities from language models, and in particular for GPT-3, provide code, and give my thoughts on what I would do if I were being more hardcore about this. Straightforward strategies Look at the probability of yes/no completion Given a binary question, like

Six Challenges with Criticism & Evaluation Around EA

Continuing on “Who do EAs Feel Comfortable Critiquing?”

An in-progress experiment to test how Laplace’s rule of succession performs in practice.

Summary I compiled a dataset of 206 mathematical conjectures together with the years in which they were posited. Then in a few years, I intend to check whether the probabilities implied by Laplace’s rule—which only depends on the number of years passed since a conjecture was created—are

My highly personal skepticism braindump on existential risk from artificial intelligence

Links to the EA Forum post and personal blog post Summary This document seeks to outline why I feel uneasy about high existential risk estimates from AGI (e.g., 80% doom by 2070). When I try to verbalize this, I view considerations like * selection effects at the level of which

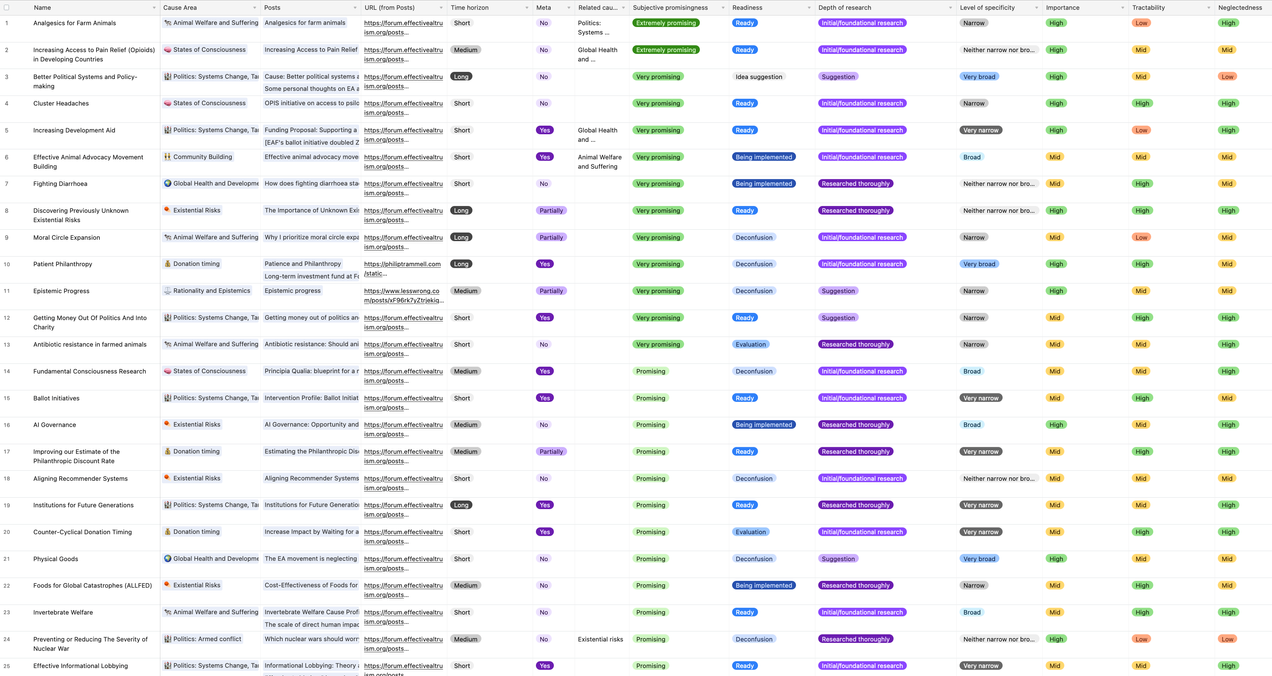

Interim Update on our Work on EA Cause Area Candidates

The story so far:

* I constructed the original Big List of Cause Candidates in December 2020.
* I spent some time thinking about the pipeline for new cause area ideas, not all of which is posted.
* I tried to use a bounty system to update the list for next year but

Probing GPT-3's ability to produce new ideas in the style of Robin Hanson and others

Also posted in the EA Forum here. Brief description of the experiment I asked a language model to replicate a few patterns of generating insight that humanity hasn't really exploited much yet, such as: 1. Variations on "if you never miss a plane, you've been