Research

My highly personal skepticism braindump on existential risk from artificial intelligence

Links to the EA Forum post and personal blog post.

Summary: This document seeks to outline why I feel uneasy about high existential risk estimates from AGI (e.g., 80% doom by 2070). When I try to verbalize this, I view considerations like

* selection effects at the level of which

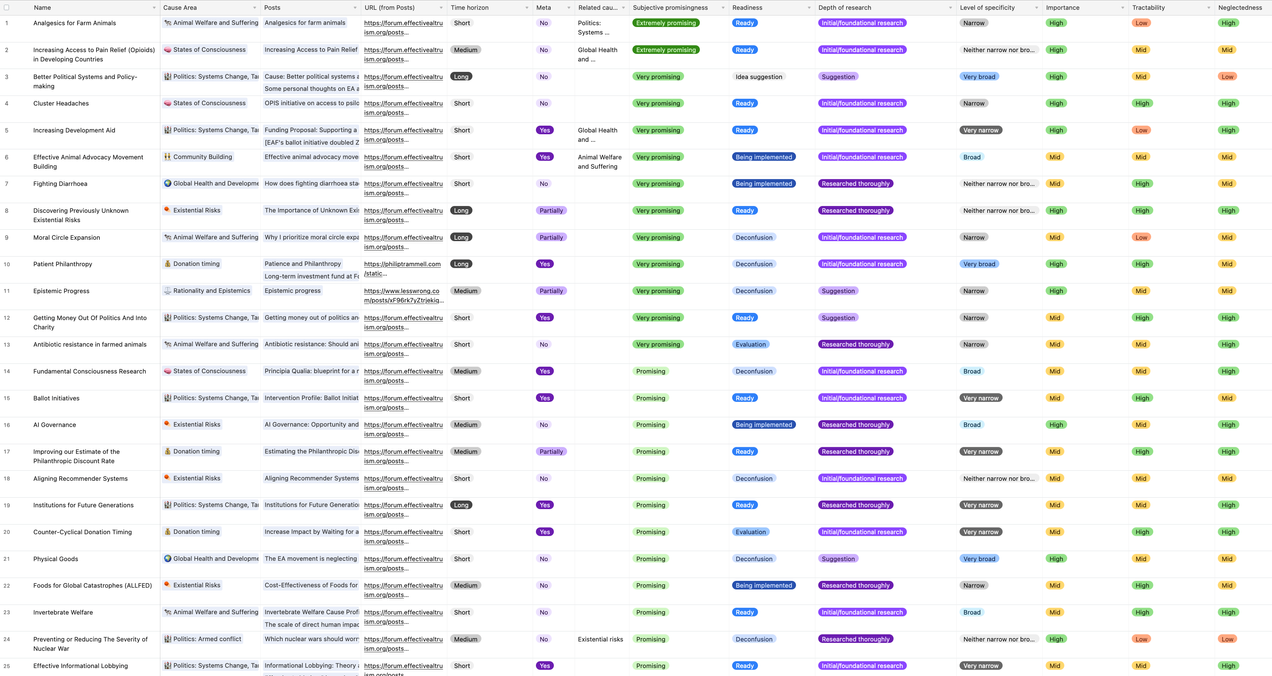

Interim Update on our Work on EA Cause Area Candidates

The story so far:

* I constructed the original Big List of Cause Candidates in December 2020.
* I spent some time thinking about the pipeline for new cause area ideas, not all of which is posted.
* I tried to use a bounty system to update the list for next year but

Who do EAs Feel Comfortable Critiquing?

Many effective altruists seem to find it scary to critique each other

Probing GPT-3's ability to produce new ideas in the style of Robin Hanson and others

Also posted in the EA Forum here.

Brief description of the experiment: I asked a language model to replicate a few patterns of generating insight that humanity hasn't really exploited much yet, such as:

1. Variations on "if you never miss a plane, you've been

Can EAs use heated topics, in part, as learning opportunities?

Given that we're going through this anyway, maybe we could at least learn from it.

EA Could Use Better Internal Communications Infrastructure

EA is lacking in many areas of enterprise infrastructure. Internal communication feels like a pressing one.

14 Ways ML Could Improve Video

There's a long road ahead for machine learning and video, even pre-AGI

Why does Academia+EA produce so few online videos?

(A shorter version is on Facebook, here. There are some good points in the comments.) Why are there so many in-person-first EA/academic presentations, but so few online-first presentations or videos? Presentations are a big deal in academia, but from what I can tell, they almost always seem to be mainly